Rotated Square Grid

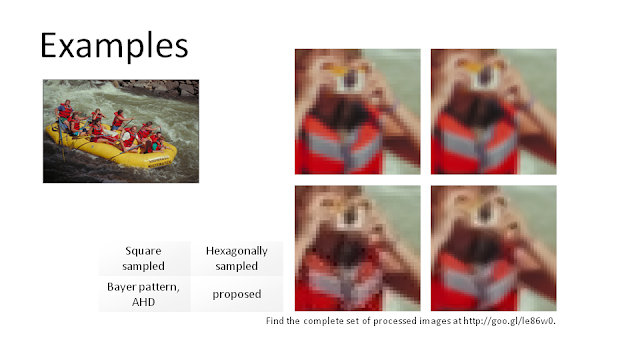

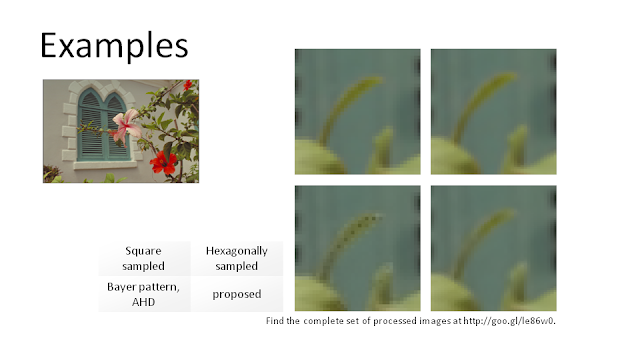

A simple internet search led me to a paper titled 'Demosaicking of Hexagonally-Structured Bayer Color Filter Array'. It seems someone shares my interest in hexagonal grids and color filter arrays, and somehow the authors figured out a way to apply something like a Bayer pattern to a hexagonal grid. That is indeed interesting! So I started reading the abstract, and there it was:

"[...] The hexagonal CFA can be considered to be a 45-degree rotational version of the Bayer pattern [...]"

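No, a hexagonal CFA cannot be considered a rotated version of the Bayer pattern! Rotating a square grid (which is the basis of the Bayer pattern) results in a rotated square grid, not a hexagonal grid! Rotation preserves all distances and angles, so every element still has exactly four nearest neighbors, at right angles to each other.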
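To make the point concrete, here is a minimal Python sketch (the helper names `rotate` and `nearest_neighbor_count` are my own, hypothetical ones) that builds a small square grid, rotates it by 45 degrees, and counts how many points sit at the minimum distance from the center point. Since rotation preserves distances, both counts come out as four, never six.

```python
import math

def rotate(points, degrees):
    """Rotate every (x, y) point about the origin by the given angle."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [(x * c - y * s, x * s + y * c) for x, y in points]

def nearest_neighbor_count(points, tol=1e-9):
    """Count the points at the smallest non-zero distance from the origin."""
    dists = [math.hypot(x, y) for x, y in points]
    dists = [d for d in dists if d > tol]          # drop the origin itself
    d_min = min(dists)
    return sum(1 for d in dists if abs(d - d_min) < tol)

# A 5x5 square grid centred on the origin, and the same grid rotated by 45 degrees.
square = [(x, y) for x in range(-2, 3) for y in range(-2, 3)]
rotated = rotate(square, 45)

print(nearest_neighbor_count(square))   # 4
print(nearest_neighbor_count(rotated))  # 4
```

Any other rotation angle gives the same result, because the neighbor count depends only on distances, which rotation does not change.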

Let me outline what I believe a hexagonal grid is: a (regular) hexagonal grid is one in which every element has six nearest neighbors, all at the same distance. Connecting the centers of these neighbors in order yields a regular hexagon. A hexagon is a shape with six sides and six corners; in the special case of a regular hexagon (which I assume is the most practical one), all sides have the same length and all interior angles are equal (120 degrees). The individual elements don't necessarily have to be hexagons; they may also be circles, for example.
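This definition can be checked numerically. The following Python sketch is only an illustration under my own assumptions: it generates a hexagonal lattice from the basis vectors (1, 0) and (1/2, √3/2), a common textbook choice, and tests whether the origin has six equidistant nearest neighbors whose directions are evenly spaced by 60 degrees. A plain square grid fails the same test. All function names are hypothetical.

```python
import math

def hex_lattice(n):
    """Points i*a1 + j*a2 with basis a1 = (1, 0) and a2 = (1/2, sqrt(3)/2)."""
    h = math.sqrt(3) / 2
    return [(i + 0.5 * j, h * j) for i in range(-n, n + 1) for j in range(-n, n + 1)]

def nearest_neighbors(points, tol=1e-9):
    """Points at the smallest non-zero distance from the origin."""
    others = [(x, y, math.hypot(x, y)) for x, y in points if math.hypot(x, y) > tol]
    d_min = min(d for _, _, d in others)
    return [(x, y) for x, y, d in others if abs(d - d_min) < tol]

def is_regular_hexagon(neighbors):
    """True if there are six neighbors whose directions are spaced 60 degrees apart."""
    if len(neighbors) != 6:
        return False
    angles = sorted(math.degrees(math.atan2(y, x)) % 360 for x, y in neighbors)
    gaps = [(angles[(k + 1) % 6] - angles[k]) % 360 for k in range(6)]
    return all(abs(g - 60) < 1e-6 for g in gaps)

nbrs = nearest_neighbors(hex_lattice(3))
print(len(nbrs), is_regular_hexagon(nbrs))   # 6 True

square = [(x, y) for x in range(-3, 4) for y in range(-3, 4)]
sq = nearest_neighbors(square)
print(len(sq), is_regular_hexagon(sq))       # 4 False
```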

Well, that sounds a little more scientific than I intended. To put it more simply: in a hexagonal grid, it should be possible to identify hexagons.

Coming back to the paper, I have to say that I can't read its content, as it is written in Korean. However, the authors also provide an image of what they call a hexagonal color filter array.

Okay... in the abstract they say they rotated the Bayer pattern to get a hexagonal pattern. This is not what I see here (again, I have to emphasize that I can't read the paper; I am just assuming that the figure illustrates the process described in the abstract). What I see is that they rotated the grid and then changed the distance between the elements. I have no idea why they would do that, as it makes the grids incomparable, but that's another topic. The main point is: the figure to the right does not show a hexagonal grid. What it shows is Fuji's SuperCCD design.

Fuji SuperCCD

Fuji's SuperCCD sensors have often been described as 'hexagonal' or 'honeycomb'. But that's not correct. SuperCCD is just a rotated square grid. Well, to make it look more interesting, the marketing guys chopped off the corners of the squares to produce octagons (!), but these were still placed on a square grid.

The figure above illustrates the layout of the SuperCCD sensors. By rotating the grid, Fuji's scientists managed to place the green pixels on a horizontally aligned square grid. In the conventional Bayer pattern, by contrast, the green pixels sit on a rotated (diagonal) grid. The intended advantage of such a layout is that horizontal and vertical lines (which are assumed to dominate natural images) may be reproduced better. However, my experience is that the exact opposite is the case: missing green pixels are much easier to interpolate horizontally or vertically than diagonally, and in the rotated layout the nearest green neighbors of a missing green pixel lie on the diagonals. But there may be algorithms that can handle that.
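The geometric part of this argument can be sketched in Python. The sketch assumes an RGGB Bayer tile and models the SuperCCD layout simply as that pattern rotated by 45 degrees, as described above; `is_green`, `nearest_green_offsets` and the other helpers are hypothetical names. For a site without green, and for a green site, it classifies the directions to the nearest green pixels as axis-aligned or diagonal, before and after the rotation.

```python
import math

def is_green(row, col):
    """RGGB Bayer tile: green sits where row + col is odd."""
    return (row + col) % 2 == 1

def nearest_green_offsets(row, col, radius=2):
    """Offsets (dx, dy) from (row, col) to its closest green sites."""
    offs = [(dc, dr)
            for dr in range(-radius, radius + 1)
            for dc in range(-radius, radius + 1)
            if (dr, dc) != (0, 0) and is_green(row + dr, col + dc)]
    d_min = min(math.hypot(dx, dy) for dx, dy in offs)
    return [(dx, dy) for dx, dy in offs if math.isclose(math.hypot(dx, dy), d_min)]

def rotate45(dx, dy):
    """Rotate an offset by 45 degrees (models the rotated, SuperCCD-like layout)."""
    c = s = math.sqrt(0.5)
    return (dx * c - dy * s, dx * s + dy * c)

def direction(dx, dy, tol=1e-9):
    """'axis' for horizontal/vertical offsets, 'diagonal' otherwise."""
    return "axis" if abs(dx) < tol or abs(dy) < tol else "diagonal"

for label, site in [("missing green (R site)", (0, 0)), ("green to green", (0, 1))]:
    offs = nearest_green_offsets(*site)
    bayer = {direction(*o) for o in offs}
    rotated = {direction(*rotate45(*o)) for o in offs}
    print(f"{label}: Bayer {bayer}, rotated {rotated}")
# missing green (R site): Bayer {'axis'}, rotated {'diagonal'}
# green to green: Bayer {'diagonal'}, rotated {'axis'}
```

In the Bayer pattern, a missing green value has its nearest green neighbors directly left, right, above and below; after the rotation those same neighbors lie on the diagonals, while the green-to-green spacing becomes horizontal and vertical. This only describes the sampling geometry, not the quality of any particular demosaicking algorithm.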

In my opinion, the pixels' octagonal shape is a pure marketing gimmick. How would reducing the light-sensitive area help? Fuji has always claimed that the SuperCCD sensors are more light-sensitive than those of their competitors. Well, that is possible, but the reason is certainly not the octagonal shape of the pixels. Maybe they found a way to decrease the gap between the pixels (which is not represented in the figure above), or they simply used better materials than the others, but there's no logical explanation why chopping off the pixels' corners would increase sensitivity.
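How much area is actually lost by chopping off the corners? A quick calculation, assuming the octagon is regular and cut from the original square cell (Fuji's actual proportions are not given here, so this is only an illustration), shows that roughly 17% of the cell disappears. `octagon_fill_factor` is a hypothetical helper.

```python
import math

def octagon_fill_factor(cell=1.0):
    """Fraction of a square cell left after cutting it into a regular octagon."""
    # Each corner is removed as a right isosceles triangle with legs a.
    # The octagon is regular when the remaining straight edge (cell - 2a)
    # equals the new cut edge (a * sqrt(2)), which gives a = cell / (2 + sqrt(2)).
    a = cell / (2 + math.sqrt(2))
    octagon_area = cell ** 2 - 4 * (a ** 2 / 2)
    return octagon_area / cell ** 2

ff = octagon_fill_factor()
print(f"{ff:.4f} of the cell remains, {1 - ff:.1%} is cut away")
# 0.8284 of the cell remains, 17.2% is cut away
```

The ratio equals 2(√2 − 1) and does not depend on the cell size. Whatever Fuji's exact cut was, any octagon inside the same square can only cover less area than the square itself.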

I think I am slightly off-topic...

Conclusion

What I'm trying to say is that neither SuperCCD nor the layout from the paper mentioned above is hexagonal. When I found articles on the internet ten years ago describing SuperCCD as 'hexagonal', I was puzzled, but I didn't care too much. But when I find scientific work, published only recently, in which these things are mixed up, I am a little worried. I am not saying that the algorithm described in this paper is bad. I'm just saying that people who invent image processing algorithms should know the difference between a square and a hexagon. Especially in scientific work, this distinction should be taken seriously. I believe that hexagonal image sampling and processing has great potential that is still unused. I wish more people would investigate (real) hexagonal image science and publish their work. And those looking for such work should not have to check whether a certain paper really deals with hexagonal image processing or just has a misleading title.